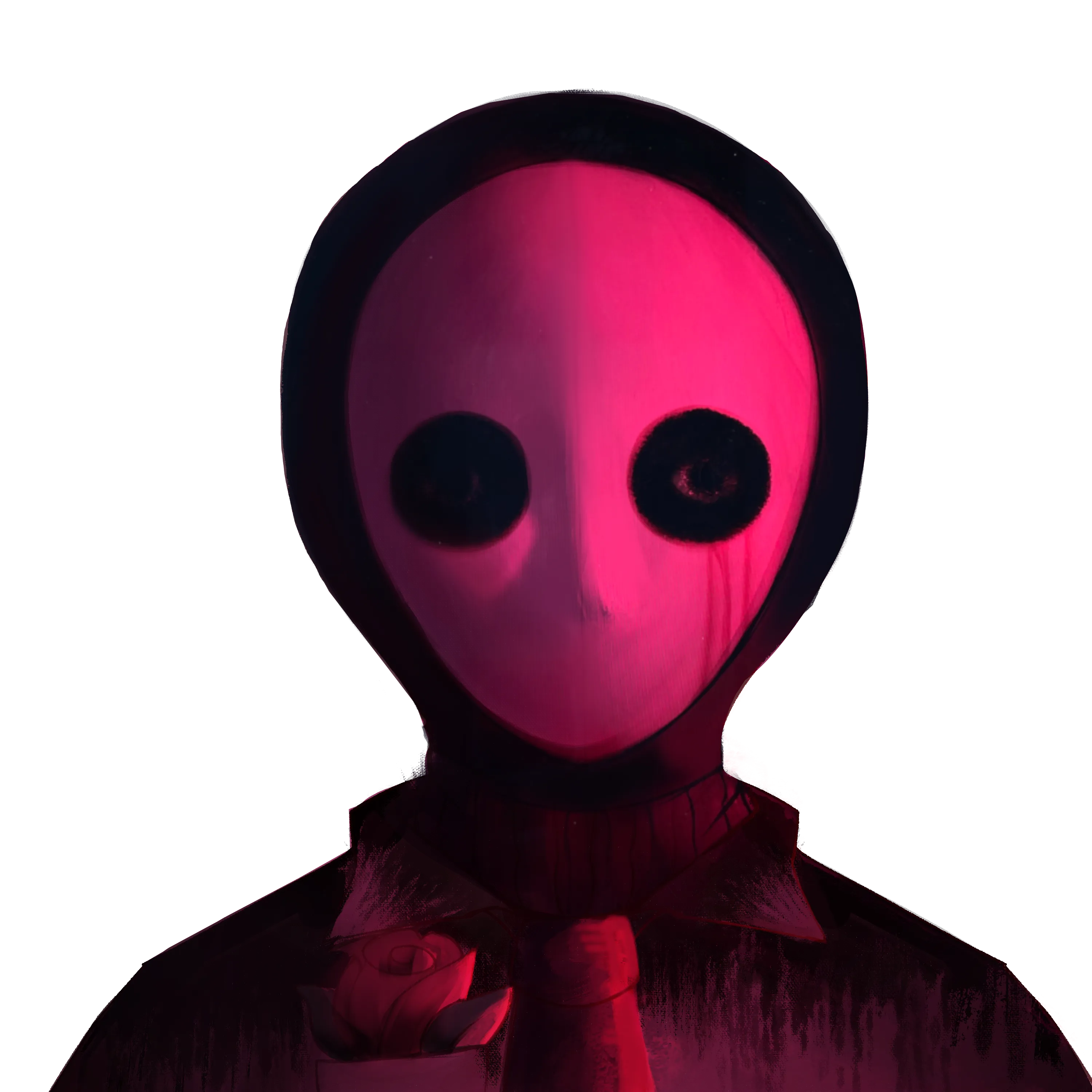

AI Researcher

Working on evolutionary ML with a focus on adaptive multi-objective functions and preserving weights while adding, removing, or modifying features and parameters. Curious about Riemannian manifolds, chaotic black-box uncertainty, signal processing, and stochastic calculus.

Articles


Overcoming Mental Warfare

When does the pain stop? This constant back and forth of two titans colliding in my conscious mind never seems to let up... Will I ever escape? I must confront them, even if it means losing myself in the process. Fear not death, but a life lived in indecisiveness.


Numbing Persistence

There is nothing you can do but endure and persist to grasp those dreams from many moons ago. It feels mind-numbing, all this effort. Maybe one day you'll reach it, but at what cost? Was it worth it? All that pain and suffering, for what cause? Are you fighting to survive, or is this just a thought exercise for you? For me, it's survival. Without it I would have nothing but to rot in my mind as time drifts by.


A Warrior's Journey

Deciding to become who you want to be is no easy feat. You are destined to battle the strongest of enemies on that path, sometimes with friends but the majority of the time alone. What does the mind of someone walking this path for the second time look like?

Career Updates

18/10

- Woke up ~08:30 after falling asleep w/ 20mg of melatonin @ ~23:00 (since 1 melatonin didn't work the night before...). Was extremely bedridden. Didn't want to get up at all and just felt like not living. Only at ~13:00 did I actually start to exist properly.

Podcast

I host a technical podcast about math, science, crypto, HFT/MEV, and infosec. My experience in these fields lets me ask deeper questions than podcasts whose hosts have only surface-level knowledge.

Talks

Listen to my first-ever interview, from when I was just starting out in my career! I talked about MEV and reverse engineering :)
